Gender decoding local job ads

A few days ago a tweet really grabbed my attention. Not only was it about something I’d thought a fair bit about in the past, it also outlined, in wonderfully concise and concrete terms, why women and minorities don’t apply to more senior tech job openings.

The tweet was from Sarah Mei, a Ruby and JS developer based in San Francisco. The first part of her tweet chain is shown below:

First, let's talk about "women & minorities." These are not separate categories! "Folks from under-represented groups" is a better phrase.

— Sarah Mei (@sarahmei) October 18, 2016

First part of a tweet chain from Sarah Mei.

I’m only showing a portion of what she wrote. She went on to talk about other aspects of the problem, and I strongly encourage you to visit her original tweet and read through the discussion; I can’t do justice to its scope here.

But the second point she made really hit home. I strongly believe that words have incredible power. Even — especially! — in today’s noisy, fragmented media landscape, a single word can still affect someone to their core. The idea that the choice of words in a job posting can influence someone’s life decisions is a bit intimidating, but definitely worth a closer look.

As luck would have it, Communitech, the local high-tech advocacy juggernaut of awesomeness, provides a job board. Wouldn’t it be interesting to get a data point for Sarah Mei’s second assertion — do Kitchener-Waterloo-Cambridge-Guelph job ads use words that are “coded white, male, and young”? Are we screwing up perhaps the first touch point between our companies and awesome candidates?

And we’re off…

You may have noticed that Sarah Mei references a baseline implementation of an application to analyze a job posting (with code), written by Kat Matfield. That’s a good place to start.

I created a Chrome Extension (based on Kat Matfield’s code — thanks! — but with some changes; see below) and used it to help ‘gender decode’ all of the job ads on waterlootechjobs.com (the Communitech job board) posted between September 20, 2016 and about midday October 20, 2016. In total, 190 job ads were analyzed.

The extension (like Kat’s gender-decoder web page) analyzes the word distribution of the job posting, relative to two word lists (one list contains masculine-coded words, the other feminine-coded words). The word lists are taken from an academic paper entitled “Evidence That Gendered Wording in Job Advertisements Exists and Sustains Gender Inequality”. Based on the relative frequency of masculine and feminine words, the job posting is assigned to one of five categories (the descriptions that Kat Matfield’s web app gives for each category are included below):

- strongly masculine-coded (“This job ad uses more words that are stereotypically masculine than words that are stereotypically feminine. It risks putting women off applying, but will probably encourage men to apply.”);

- masculine-coded (uses the same description as strongly masculine-coded, above);

- neutral (“This job ad doesn’t use any words that are stereotypically masculine and stereotypically feminine. It probably won’t be off-putting to men or women applicants.”);

- feminine-coded (“This job ad uses more words that are stereotypically feminine than words that are stereotypically masculine. Fortunately, the research suggests this will have only a slight effect on how appealing the job is to men, and will encourage women applicants.”);

- strongly feminine-coded (uses the same description as feminine-coded, above).
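The categorization above can be sketched in a few lines of Python. This is a minimal illustration, not Kat Matfield’s code or my extension: the stems below are a handful of made-up examples rather than the paper’s word lists, and `strong_margin` is a hypothetical cut-off for what counts as “strongly” coded.

```python
import re
from collections import Counter

# Illustrative stems only. These are NOT the paper's word lists.
MASCULINE_STEMS = ("compet", "decisive", "domina", "independen", "lead")
FEMININE_STEMS = ("collab", "interperson", "nurtur", "support", "understand")


def coded_words(text, stems):
    """Count every word in `text` that starts with one of the stems."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    return Counter(word for word in words if word.startswith(stems))


def decode(text, strong_margin=3):
    """Return one of the five categories for a job ad.

    `strong_margin` is a hypothetical cut-off: a lead of more than this
    many coded words counts as "strongly" coded.
    """
    masculine = sum(coded_words(text, MASCULINE_STEMS).values())
    feminine = sum(coded_words(text, FEMININE_STEMS).values())
    score = feminine - masculine
    if score == 0:
        # A tie (including no coded words at all) is treated as neutral.
        return "neutral"
    side = "feminine" if score > 0 else "masculine"
    strength = "strongly " if abs(score) > strong_margin else ""
    return f"{strength}{side}-coded"


ad = "We need a competitive, decisive leader who can dominate the market."
print(decode(ad))  # strongly masculine-coded
```

Matching on stems rather than whole words means that “leader” and “leading” both count as hits for `lead`. That is convenient, but it is also one source of the false hits a human reader would throw out.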

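I think that the descriptions of each of these categories are quite good. If you skimmed over them, take another look, because there are two great bits of information you may have missed. First, the bad news: a masculine-coded ad “risks putting women off applying, but will probably encourage men to apply.”

Second, a bit more optimistic: for a feminine-coded ad, “the research suggests this will have only a slight effect on how appealing the job is to men, and will encourage women applicants.”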

Well then.

The first time I was asked to interview a candidate was to fill in for a colleague who was ill that day. I was barely a year out of university and I wasn’t as prepared as I usually like to be; I remember it being more stressful for me than it was for the candidate. But I also remember, even as someone who had never before sat on the interviewer’s side of the table, how terribly lopsided it felt: the game was rigged in favour of the house! [Shocking, I know.]

Since that first stressful day, I’ve interviewed perhaps 100 people. It has only been during the past seven or eight years, however, that I’ve really felt like I (and, by extension, the companies I work for) could do better with hiring talented people who are a good fit. [Before then I just figured: “Yep, this is the process.”]

I’ll take this opportunity to say that when I have talked to people in human resources, I’ve learned just how much I don’t actually know about what it takes to attract, hire, and retain quality folks. That said, 100 interviews later I know the process of applying for a job (as a candidate) and hiring someone (as an employer) could be better; it could be designed to be better. But successfully designing a solution requires knowing (exploring, discussing, researching — learning about! — and acknowledging) the problem you’re tackling.

An example: at one point in my career I was a member of a team struggling to hire a new developer. The process was taking months, involved more than a hundred applicants (but fewer than five phone screens and even fewer in-person interviews), and was frustrating everyone. All this frustration came despite our spending considerable time redesigning, rewriting, and discussing the job posting. We deliberated over individual word choices, went through several drafts and emotionally charged edits, and finally had something we felt was…pretty good! It covered both technical and soft skills. It introduced the range of expectations of a successful candidate as part of the team. It explained how our team worked and how it worked well.

And you know what? We didn’t once talk about how this job posting would be received by women. Or minorities. Or, as Sarah Mei calls them, “folks from under-represented groups”. That was a lesson in designing blind, or designing with bias: how could we be confident in our designed solution when we hadn’t adequately researched or understood the problem space?

So, how did tech companies around Kitchener-Waterloo fare?

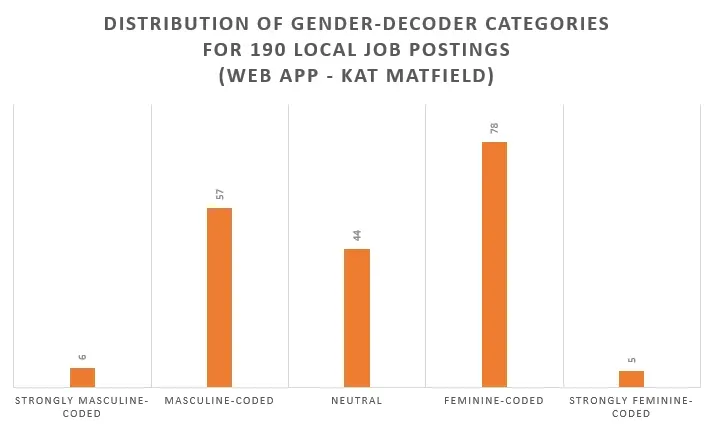

I had my own hypothesis, biased no doubt by my experience living in a very white, very male tech career bubble for two decades. Here is the breakdown of the analysis of 190 job postings using Kat Matfield’s web app:

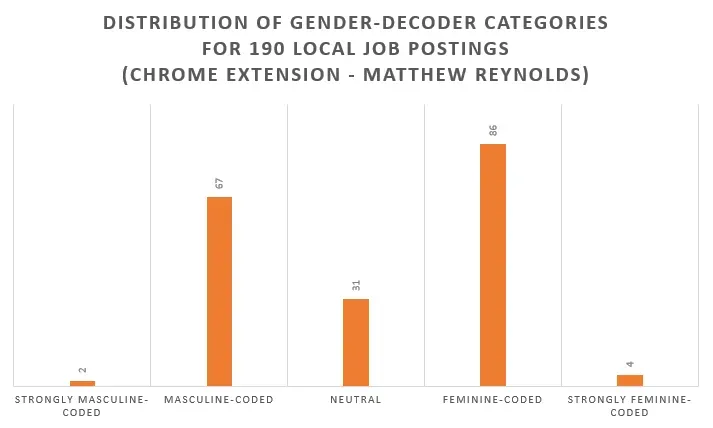

Here is the breakdown of the analysis of the same 190 job postings using my Chrome Extension:

While the distributions are similar at first glance, they aren’t the same. Why is that? Well, during the port of the web app code to a Chrome Extension, I noticed differing categorizations between the two — it appeared as if the web app wasn’t handling multiple instances of the same word consistently and some words weren’t getting picked up at all. The idea was the same, but we ended up disagreeing on 46 of the 190 categorizations. [I’ve reached out to the author of the web app to see if I just misunderstood something about the algorithm. Once it gets sorted, I’ll post my code. Until then, assume there is a big asterisk on my results.]
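To see why the handling of repeated words matters, here is a small sketch in Python. It is not a diagnosis of either tool. It only shows, under assumed stems and a made-up ad, that counting every occurrence of a coded word and counting each distinct word once can give different scores for the same text.

```python
import re

# Illustrative stems only. These are NOT the paper's word lists.
MASCULINE_STEMS = ("compet", "lead")
FEMININE_STEMS = ("collab", "support")


def matches(text, stems):
    """Return every word in `text` that starts with one of the stems."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    return [word for word in words if word.startswith(stems)]


def score(text, count_repeats):
    """Feminine hits minus masculine hits.

    With count_repeats=False, each distinct word is counted only once.
    """
    masculine = matches(text, MASCULINE_STEMS)
    feminine = matches(text, FEMININE_STEMS)
    if not count_repeats:
        masculine, feminine = set(masculine), set(feminine)
    return len(feminine) - len(masculine)


ad = "Lead the team. Lead the roadmap. Lead by example, and support your peers."
print(score(ad, count_repeats=True))   # -2: leans masculine
print(score(ad, count_repeats=False))  # 0: a tie
```

The same ad leans masculine under one rule and comes out as a tie under the other, so two implementations of the same idea can reasonably land in different categories.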

Mine is very rudimentary research by any measure: I used a single method to inspect one aspect of a small, localized data set. The validity of that method is certainly open to discussion. Do the word lists make sense? Are there false hits which would be eliminated when put into context by a human or more mature tool? Etc.

But it was invigorating to use technology to better understand — if only a bit — a relevant social problem in the very industry I’m happily part of. As someone who thinks a diverse team is a stronger team, this experiment made me think about the problem and how we can improve the situation.

And, hopefully, you’re now thinking about that too.

Postscript

The purpose of this article is to stimulate conversation around the issue of diversity, opportunity, and equality in the high tech industry. But where could one run with this sort of experiment?

It would be interesting to explore the impact of building similar, more mature analysis tools directly into job sites such as Communitech’s Waterloo Tech Jobs site (or, on a larger scale, sites like LinkedIn). Would companies, when presented with such analysis, become more aware of the impact of their choice of words? Would organizations better appreciate the variables involved in attracting under-represented groups?

And what might we find if we had access to historical postings on job sites? Would there be trends worth exploring? Are we getting better or worse in this particular aspect? Are some technical industries or role postings predisposed to wording which is off-putting to under-represented groups?

I purposefully didn’t try to find a pattern as to which sorts of job postings were at one extreme of the categorization range or the other. I’m not sure what that would accomplish and I’m sure that it wouldn’t be significant (from a statistical point of view). But my Spidey Sense™ sure was tingling when reading some of the posts, for lots of reasons.

This exploration addresses one facet of a much larger, complicated problem. But can we use design and technology to fix that problem? So much to think about; I’m off to read (and not just more job postings)!